I am a Staff Engineer at Samsung Research, where I develop on-device AI models. I received my Ph.D. in Artificial Intelligence from Seoul National University, where I was advised by Ernest K. Ryu. I also hold an M.S. in Mathematical Sciences from Seoul National University and a B.S. in Mathematics Education from Dankook University. In addition, I have worked as a research scientist intern at NAVER AI Lab and KRAFTON AI.
Here is my CV.
Email / Google Scholar / X / GitHub / LinkedIn

I am interested in Language Models, Multi-modal Learning, Information Retrieval, and Clustering. My research aims to build a fundamental understanding of these models in order to tackle their inherent ambiguity. Some papers are highlighted.

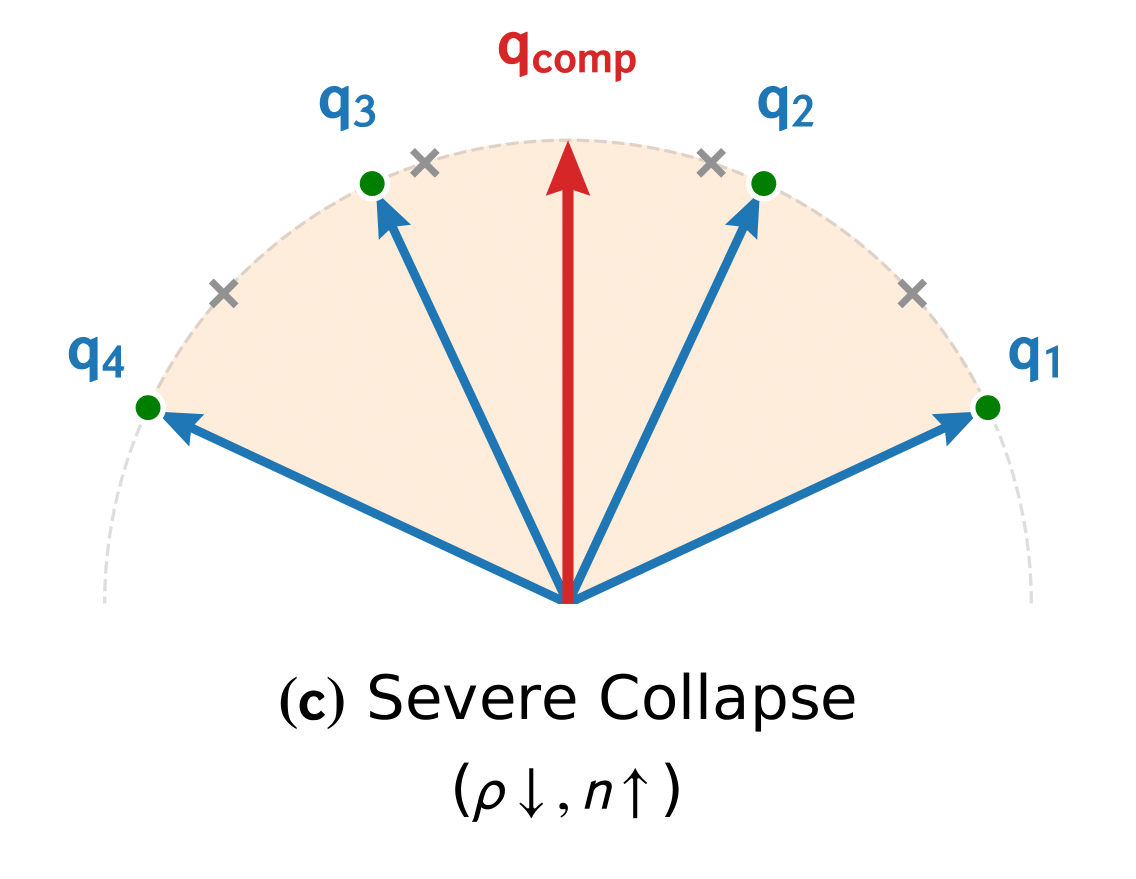

Sehyun Kwon, Sahngmin Yoo
Preprint, 2026
We provide theoretical and empirical evidence of the inherent limitations of dense retrieval in handling compositional intents.

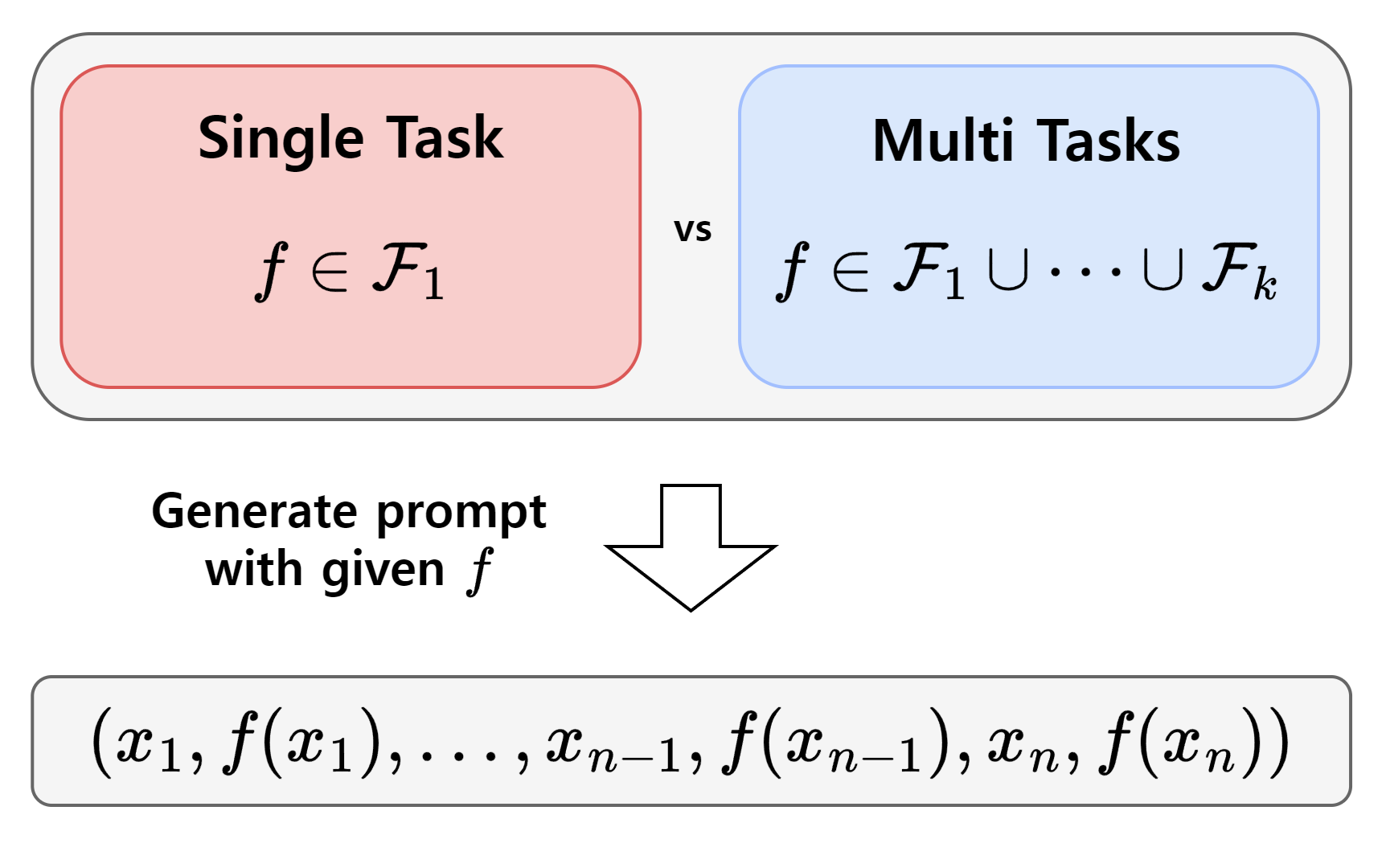

Jaeyeon Kim*, Sehyun Kwon*, Joo Young Choi, Jongho Park, Jaewoong Cho, Jason D. Lee, Ernest K. Ryu
Transactions on Machine Learning Research (TMLR), 2025
paper / code
We show that training on multiple diverse in-context learning tasks simultaneously shortens the loss plateaus, making each task easier to learn.

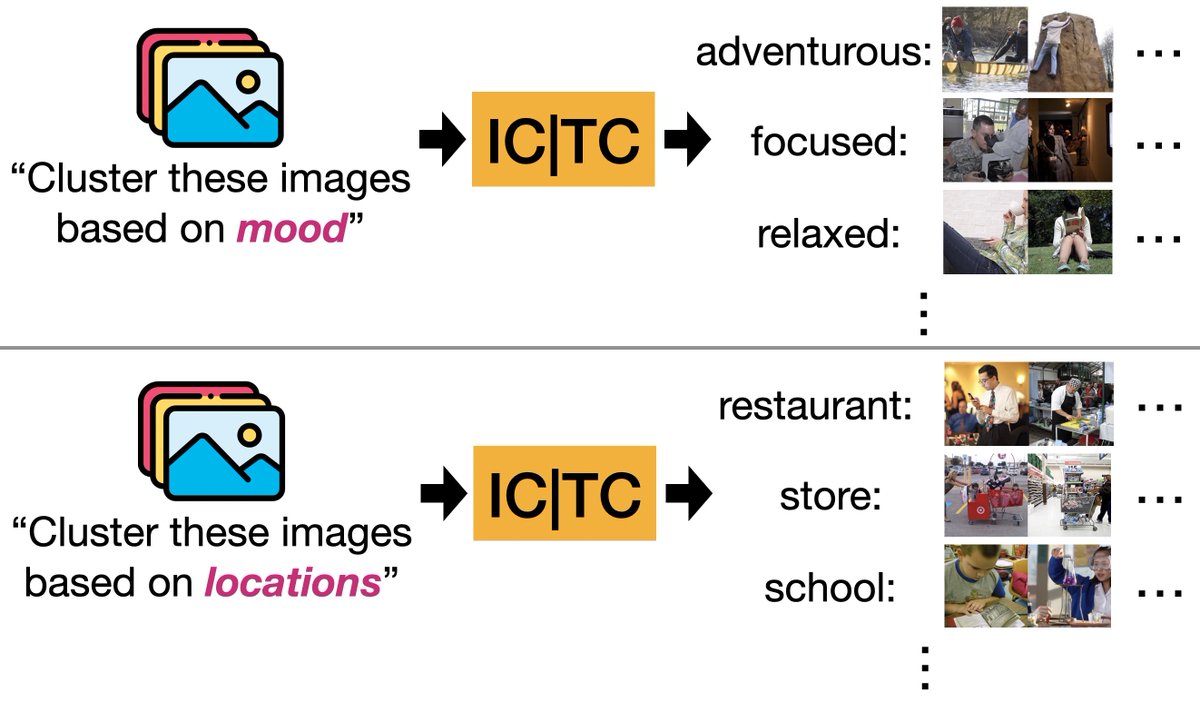

Sehyun Kwon, Jaeseung Park, Minkyu Kim, Jaewoong Cho, Ernest K. Ryu, Kangwook Lee
International Conference on Learning Representations (ICLR), 2024
paper / code / summary1 / summary2
We propose the first framework that resolves the fundamental ambiguity of clustering by explicitly conditioning the process on user-provided text criteria.

Sehyun Kwon, Joo Young Choi, Ernest K. Ryu
International Conference on Machine Learning (ICML), 2023
paper / code
We develop invariant representation learning methods using implicit neural representations for geometric transformations.

Albert No, TaeHo Yoon, Sehyun Kwon, Ernest K. Ryu
International Conference on Machine Learning (ICML), 2021
paper / code
We prove that Wasserstein GANs with infinitely wide generators have no spurious stationary points in the optimization landscape.

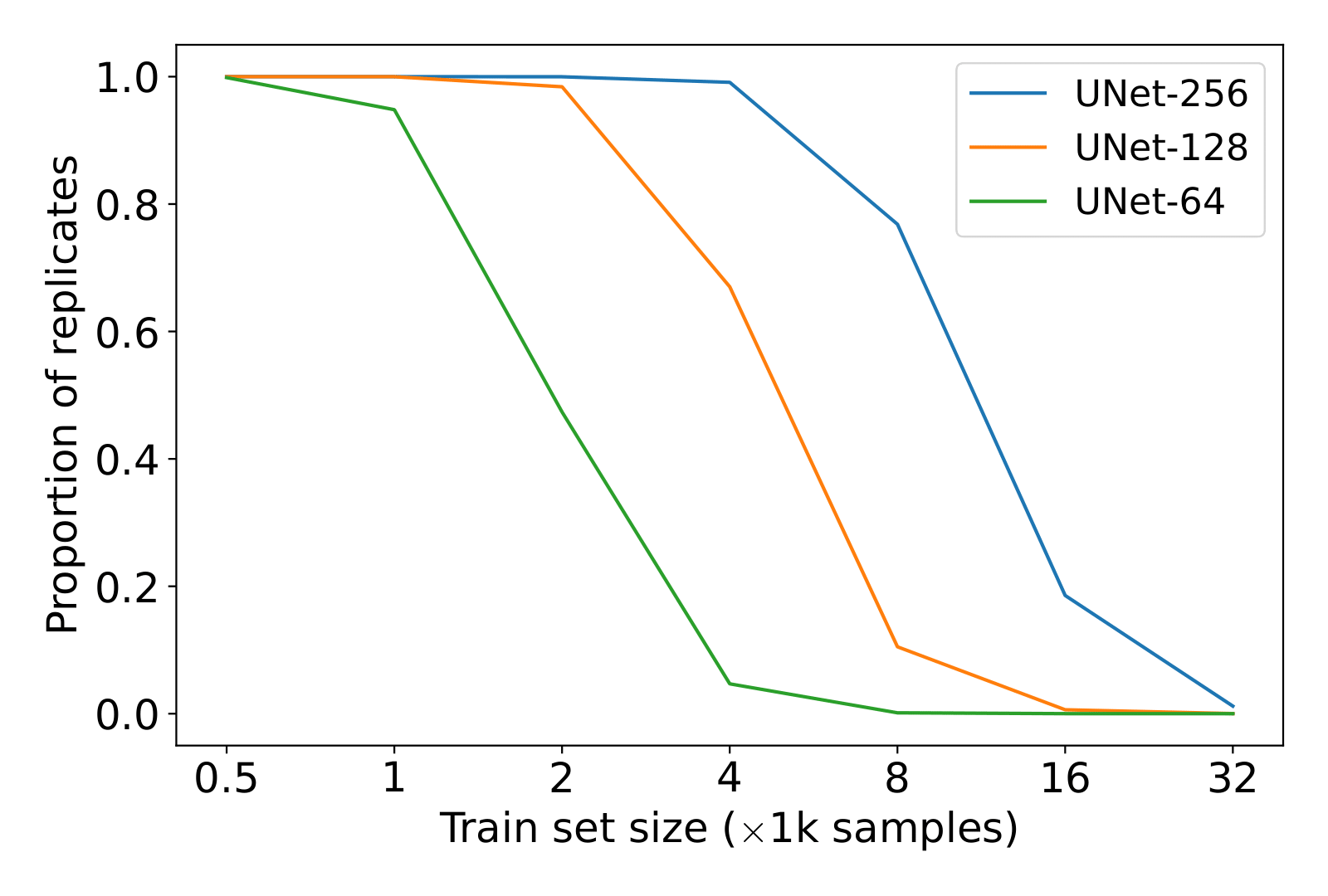

TaeHo Yoon, Joo Young Choi, Sehyun Kwon, Ernest K. Ryu
ICML 2023 Workshop on Structured Probabilistic Inference & Generative Modeling, 2023
paper
We study the memorization and generalization properties of diffusion probabilistic models.

Youlchon AI Star Fellowship, 2024
Outstanding TA Award for the Mathematical Foundations of Deep Neural Networks course, Seoul National University, 2022

Website template from Jon Barron